The Agent: Reasoning, Planning, and Action

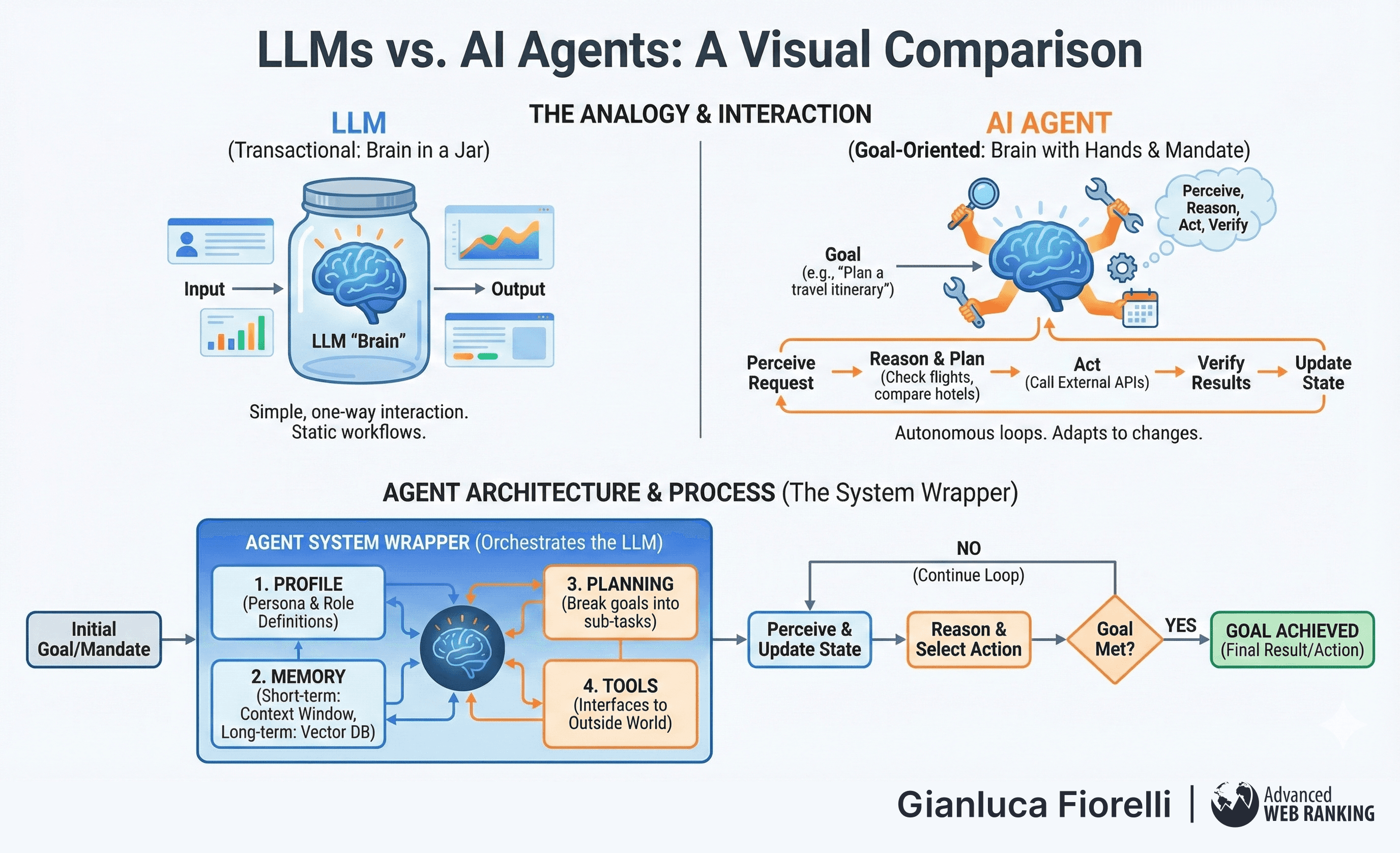

As we move from the architecture of the model to its application, we encounter the realm of Agentic AI. Here, the LLM transitions from a passive text generator to an active problem solver, capable of using tools, planning workflows, and executing complex tasks autonomously.

The AI Agent

Alternative Nomenclature: Autonomous Agent, Agentic System.

The Conceptual Framework:

If an LLM is a "brain in a jar," an Agent is that brain equipped with hands and a mandate.

A standard LLM interaction is transactional: input leads to output.

An Agentic interaction is goal-oriented.

If you ask an Agent to "Plan a travel itinerary," it does not simply hallucinate a list of hotels. It perceives the request, reasons about the necessary steps (check flights, compare hotels, check calendar), acts by calling external APIs, and verifies the results.

Inside the “Black Box”:

An Agent is a system wrapper that orchestrates the LLM. Its architecture generally consists of four components:

Profile: The persona and role definitions.

Memory: Short-term memory (the context window) and long-term memory (typically a vector database), which together store the interaction history.

Planning: The ability to break high-level goals into sub-tasks.

Tools: The interfaces that allow the agent to interact with the outside world.

Agents function in loops, continuously perceiving the environment and updating their state until the goal is met. This autonomy distinguishes them from static workflows, allowing them to adapt to unexpected errors or changing information.
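To make the loop concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Agent` class, the hard-coded `plan` method (standing in for a real LLM planning call), and the stub tools are hypothetical names, not any particular framework's API. It shows the four components working together and how a failing tool becomes an observation rather than a crash.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    profile: str                                      # persona and role definition
    tools: dict[str, Callable[[str], str]]            # tool name -> callable
    memory: list[str] = field(default_factory=list)   # short-term interaction history

    def plan(self, goal: str) -> list[str]:
        # Stand-in for an LLM planning call: a fixed decomposition into tool names.
        return ["check_flights", "compare_hotels", "check_calendar"]

    def run(self, goal: str, max_steps: int = 10) -> list[str]:
        pending = self.plan(goal)
        steps = 0
        # Loop: act, observe, update state, until no sub-tasks remain.
        while pending and steps < max_steps:
            tool_name = pending.pop(0)
            try:
                observation = self.tools[tool_name](goal)
            except Exception as err:
                # A tool failure is recorded as an observation instead of aborting.
                observation = f"error: {err}"
            self.memory.append(f"{tool_name} -> {observation}")
            steps += 1
        return self.memory


def flaky_calendar(goal: str) -> str:
    # Hypothetical tool that simulates an unexpected failure.
    raise RuntimeError("calendar service unavailable")


agent = Agent(
    profile="travel planner",
    tools={
        "check_flights": lambda goal: "2 flights found",
        "compare_hotels": lambda goal: "3 hotels found",
        "check_calendar": flaky_calendar,
    },
)
history = agent.run("Plan a travel itinerary")
```

A real agent would feed `history` back to the model so it can re-plan after the calendar error; this sketch only records it.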

Tool Calling and Function Calling

Alternative Nomenclature: API Integration, Tool Use.

The Conceptual Framework:

This is the mechanism that empowers an AI to admit ignorance and seek external help.

Imagine asking an assistant, "What is 4,932 multiplied by 12,391?"

Instead of guessing, the assistant pulls out a calculator.

In the context of AI, the "calculator" could be a Python interpreter, a Google Search API, or a corporate SQL database.

The AI does not execute the tool itself; rather, it generates a structured instruction (like a function call), which the system executes, returning the result to the AI for synthesis.

Inside the “Black Box”:

Modern LLMs are fine-tuned to detect when a user's query requires an external tool.

When triggered, the model outputs a structured object (typically JSON) containing the tool name and arguments (e.g., {"tool": "weather_api", "location": "Paris"}).

The application layer parses this, calls the actual API, and feeds the output back to the LLM as a new "observation".
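The round trip can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the JSON shape with a nested `arguments` object, the `TOOLS` registry, and the `execute_tool_call` helper are all hypothetical, and real providers each define their own schema. The model's output is hard-coded here rather than generated.

```python
import json


def multiply(a: int, b: int) -> int:
    # The "calculator" tool from the example above.
    return a * b


# Hypothetical registry mapping tool names to real functions.
TOOLS = {"multiply": multiply}


def execute_tool_call(model_output: str) -> str:
    # The application layer, not the model, parses and runs the call.
    call = json.loads(model_output)
    tool = TOOLS[call["tool"]]
    result = tool(**call["arguments"])
    # The result goes back to the model as an observation.
    return json.dumps({"tool": call["tool"], "observation": result})


# What a model might emit instead of guessing the product.
model_output = '{"tool": "multiply", "arguments": {"a": 4932, "b": 12391}}'
observation = execute_tool_call(model_output)
```

Here `observation` carries the exact product, 61112412, which the model can then phrase as a natural-language answer.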

This capability transforms the LLM from a text generator into an orchestrator of software. However, it introduces security risks; "prompt injection" attacks can potentially trick an agent into executing destructive tools, necessitating rigorous permission scoping.
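One simple form of permission scoping is an allowlist checked before any tool runs. The sketch below is an assumption-laden illustration, not a complete defence: `ALLOWED_TOOLS`, `ToolNotPermitted`, and `guarded_call` are hypothetical names, and production systems usually add argument validation and human approval for destructive actions.

```python
class ToolNotPermitted(Exception):
    """Raised when the model requests a tool outside the agent's scope."""


def search(query: str) -> str:
    return f"results for {query}"


def delete_file(path: str) -> str:
    # Destructive tool: registered in the system but not granted to this agent.
    return f"deleted {path}"


REGISTRY = {"search": search, "delete_file": delete_file}

# This agent is only scoped to read-only tools.
ALLOWED_TOOLS = frozenset({"search"})


def guarded_call(tool_name, registry, allowed, **kwargs):
    # Check the allowlist first, so an injected instruction cannot reach
    # a tool the agent was never granted.
    if tool_name not in allowed:
        raise ToolNotPermitted(f"tool '{tool_name}' is not permitted")
    return registry[tool_name](**kwargs)


safe_result = guarded_call("search", REGISTRY, ALLOWED_TOOLS, query="Paris weather")
```

If injected text persuades the model to request `delete_file`, `guarded_call` raises `ToolNotPermitted` before anything is executed.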

Reasoning Frameworks: CoT, ToT, and ReAct

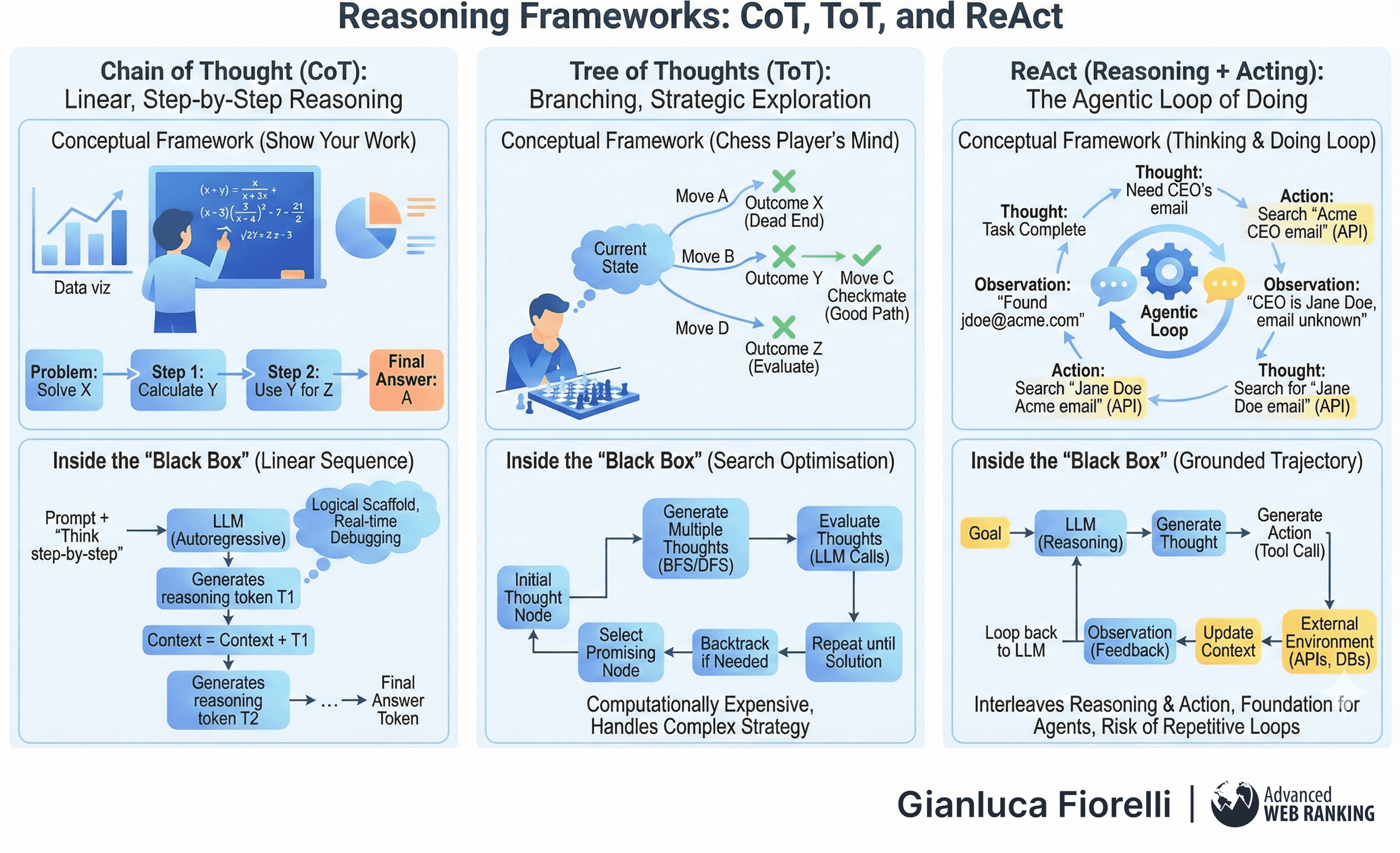

To function effectively as agents, LLMs must employ reasoning strategies that go beyond answering in a single pass.

Chain of Thought (CoT)

Conceptual Framework:

This is equivalent to asking a math student to "show their work." When an LLM attempts to solve a complex logic puzzle in one go, it often fails.

However, prompting it to "think step-by-step" forces it to externalise its reasoning process. It writes out intermediate steps ("First, I calculate X... Then, I use X to find Y..."), which creates a logical scaffold for the final answer.

Inside the “Black Box”:

CoT works by turning a multi-step reasoning problem into a linear sequence of tokens. Because the model is autoregressive, each generated reasoning token becomes part of the context for the tokens that follow, so later steps can build on earlier intermediate results instead of jumping straight to an answer. In practice, this tends to reduce logic errors on multi-step problems.
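The mechanism can be illustrated with a toy sketch in Python. No real model is called: `stub_next_step` is a hypothetical stand-in that replays scripted reasoning steps, and `build_cot_prompt` and `generate` are illustrative names. The point is only to show each generated step being appended to the context that the next step is conditioned on.

```python
from typing import Callable, Optional


def build_cot_prompt(question: str) -> str:
    # The trailing cue asks the model to write out intermediate steps.
    return f"Q: {question}\nA: Let's think step by step.\n"


SCRIPTED_STEPS = [
    "First, 4,932 x 12,000 = 59,184,000.",
    "Then, 4,932 x 391 = 1,928,412.",
    "So the answer is 59,184,000 + 1,928,412 = 61,112,412.",
]


def stub_next_step(context: str) -> Optional[str]:
    # Stand-in for the model: picks the next step based on what is already in context.
    done = sum(step in context for step in SCRIPTED_STEPS)
    return SCRIPTED_STEPS[done] if done < len(SCRIPTED_STEPS) else None


def generate(context: str, next_step: Callable[[str], Optional[str]]) -> str:
    # Autoregressive loop: every emitted step is fed back in as context.
    while (step := next_step(context)) is not None:
        context += step + "\n"
    return context


final_context = generate(
    build_cot_prompt("What is 4,932 multiplied by 12,391?"), stub_next_step
)
```

By the time the last step is produced, both partial products are already in the context, which is what makes the final addition easy to get right.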

Tree of Thoughts (ToT)

Conceptual Framework:

If Chain of Thought is a linear path, Tree of Thoughts is a branching map of possibilities.

It mimics the way a chess player thinks: "If I move here, X happens... but if I move there, Y happens."

ToT allows the model to explore multiple reasoning paths simultaneously, self-evaluate the promise of each path, and backtrack if a path leads to a dead end.

Inside the “Black Box”:

ToT treats reasoning as a search problem over intermediate "thoughts."

It uses algorithms like Breadth-First Search (BFS) or Depth-First Search (DFS) to navigate the space of "thoughts."

While computationally expensive, since it requires multiple LLM calls to generate and evaluate different branches, it can solve complex strategic problems that linear reasoning handles poorly.
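A breadth-first variant can be sketched on a toy puzzle: reach 11 from 1 using only "+3" and "*2". This is an illustration under assumptions, not the reference implementation. In a real system the `expand` and `score` functions would each be LLM calls (propose next thoughts, rate their promise); here they are plain arithmetic, and all names are hypothetical.

```python
TARGET = 11
OPS = [("+3", lambda v: v + 3), ("*2", lambda v: v * 2)]


def expand(state):
    # Propose candidate next thoughts from a state (value, path).
    value, path = state
    return [(op(value), path + (name,)) for name, op in OPS]


def score(state):
    # Self-evaluation stand-in: closer to the target is more promising.
    return -abs(TARGET - state[0])


def is_goal(state):
    return state[0] == TARGET


def tree_of_thoughts_bfs(root, beam_width=2, max_depth=5):
    frontier = [root]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in expand(state)]
        for candidate in candidates:
            if is_goal(candidate):
                return candidate
        # Prune: keep only the most promising branches, abandon the rest.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
        if not frontier:
            return None
    return None


solution = tree_of_thoughts_bfs((1, ()))
```

With a beam width of 2, the search drops the weaker branches at each level and finds the path `("+3", "*2", "+3")`. The cost is visible in the structure: every level expands and scores every surviving branch.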

ReAct (Reasoning + Acting)

Conceptual Framework:

ReAct is the standard loop for agentic behaviour, combining "Thinking" and "Doing." A ReAct agent follows a strict trace:

Thought: "I need to find the CEO of Acme Corp."

Action: Search ["current CEO of Acme Corp"]

Observation: "Results show the CEO is Jane Doe."

Thought: "Now I need to find her email."

This loop helps keep the model from hallucinating actions or acting without context, because each new thought is grounded in the latest observation from the outside world.

Inside the “Black Box”:

By interleaving reasoning traces with action executions, ReAct agents maintain a grounded trajectory. This pattern underlies agent implementations in popular frameworks such as LangChain, though an agent can get stuck in repetitive loops if it is not monitored.
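The loop, along with two simple guards against repetition, can be sketched in Python as follows. This is a minimal illustration, not LangChain's implementation: `react_loop`, the scripted policies (stand-ins for the LLM), and `fake_search` are all hypothetical. The guards are a step limit and a check for repeated identical actions.

```python
def react_loop(policy, tools, question, max_steps=5):
    trace = [f"Question: {question}"]
    seen_actions = set()
    for _ in range(max_steps):
        # The policy (an LLM in practice) reads the trace and decides what to do.
        thought, action, arg = policy(trace)
        trace.append(f"Thought: {thought}")
        if action == "finish":
            trace.append(f"Answer: {arg}")
            return trace
        if (action, arg) in seen_actions:
            # Guard: the same action with the same input means the agent is looping.
            trace.append("Stopped: repeated action")
            return trace
        seen_actions.add((action, arg))
        trace.append(f"Action: {action}[{arg}]")
        trace.append(f"Observation: {tools[action](arg)}")
    trace.append("Stopped: step limit reached")
    return trace


def scripted_policy(trace):
    # Stand-in for the model: search first, then answer from the observation.
    if not any(line.startswith("Observation:") for line in trace):
        return ("I need to find the CEO of Acme Corp.", "search", "current CEO of Acme Corp")
    return ("The search result names the CEO.", "finish", "Jane Doe")


def stuck_policy(trace):
    # Stand-in for a model that keeps issuing the same search.
    return ("I should search again.", "search", "current CEO of Acme Corp")


def fake_search(query):
    return "Results show the CEO is Jane Doe."


tools = {"search": fake_search}
good_trace = react_loop(scripted_policy, tools, "Who is the CEO of Acme Corp?")
stuck_trace = react_loop(stuck_policy, tools, "Who is the CEO of Acme Corp?")
```

The first run ends with `Answer: Jane Doe`; the second is cut off with `Stopped: repeated action` on its second identical search rather than running until the step limit.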

Article by

Gianluca Fiorelli

With almost 20 years of experience in web marketing, Gianluca Fiorelli is a Strategic and International SEO Consultant who helps businesses improve their visibility and performance on organic search. Gianluca has collaborated with clients from various industries and regions, such as Glassdoor, Idealista, Rastreator.com, Outsystems, Chess.com, SIXT Ride, Vegetables by Bayer, Visit California, Gamepix, James Edition and many others.

A very active member of the SEO community, Gianluca shares his insights and best practices on SEO, content, Search marketing strategy and the evolution of Search daily, on social media channels such as X, Bluesky and LinkedIn and through the blog on his website: IloveSEO.net.